Thread scheduling in Python is a crucial concept for developers looking to improve the performance of their applications by leveraging concurrency. This guide delves into the nuances of thread scheduling, including Python’s threading module, the Global Interpreter Lock (GIL), and various synchronization mechanisms.

What is Thread Scheduling?

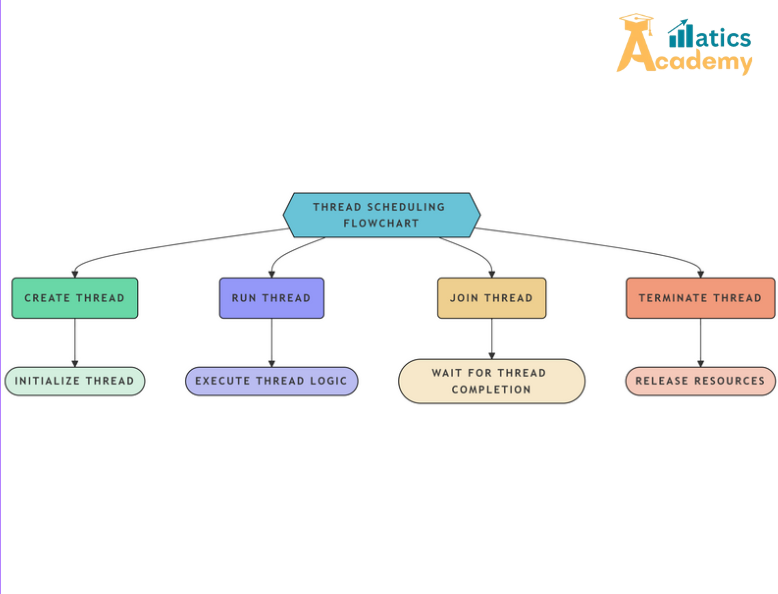

Thread scheduling determines the execution order of threads in a multi-threaded program. In Python, the threading module is commonly used for creating and managing threads. However, the Global Interpreter Lock (GIL) can limit true parallel execution in CPython.

The Global Interpreter Lock (GIL)

The GIL is a mutex that protects access to Python objects, ensuring thread safety. While it simplifies Python’s memory management, it can be a bottleneck for CPU-bound tasks. For I/O-bound tasks, the GIL’s impact is minimal as the threads often wait for external resources.

Creating Threads in Python

Python’s threading module makes it easy to create and manage threads. Here’s a simple example:

import threading

def print_numbers():

for i in range(5):

print(f"Thread {threading.current_thread().name}: {i}")

# Creating threads

thread1 = threading.Thread(target=print_numbers, name="Thread-1")

thread2 = threading.Thread(target=print_numbers, name="Thread-2")

# Starting threads

thread1.start()

thread2.start()

# Waiting for threads to finish

thread1.join()

thread2.join()

Thread Scheduling in Python (Policies)

Python threads rely on the operating system’s thread scheduler that scheduling in python, which may follow different policies such as:

- First-Come, First-Served (FCFS)

- Round Robin

- Priority Scheduling

The scheduler switches between threads based on a combination of these policies and the GIL.

Synchronization Mechanisms

When multiple threads access shared resources, synchronization ensures consistency. Python provides the following mechanisms:

1. Locks

A lock ensures that only one thread accesses a critical section at a time.

lock = threading.Lock()

def increment_counter(counter):

with lock:

counter[0] += 1

counter = [0]

threads = [threading.Thread(target=increment_counter, args=(counter,)) for _ in range(5)]

for thread in threads:

thread.start()

for thread in threads:

thread.join()

print(f"Final Counter Value: {counter[0]}")

2. RLocks (Reentrant Locks)

RLocks allow a thread to acquire a lock multiple times.

coderlock = threading.RLock()

def task():

with rlock:

print("Acquired RLock")

thread = threading.Thread(target=task)

thread.start()

thread.join()

3. Semaphores

Semaphores manage access to a resource pool.

semaphore = threading.Semaphore(2)

def access_resource(thread_id):

with semaphore:

print(f"Thread {thread_id} accessing resource")

threads = [threading.Thread(target=access_resource, args=(i,)) for i in range(5)]

for thread in threads:

thread.start()

for thread in threads:

thread.join()

4. Condition Variables

Condition variables synchronize threads based on specific conditions.

condition = threading.Condition()

def worker():

with condition:

print("Waiting for signal")

condition.wait()

print("Received signal")

thread = threading.Thread(target=worker)

thread.start()

with condition:

print("Sending signal")

condition.notify()

thread.join()

Thread Safety in Python(Scheduling in python)

Python’s built-in data structures, like lists and dictionaries, are not thread-safe. Use synchronization primitives or thread-safe libraries, such as queue.Queue, for shared data.

Multithreading vs. Multiprocessing

For CPU-bound tasks, Python’s multiprocessing module is often preferred as it bypasses the GIL, allowing true parallelism.

from multiprocessing import Process

def cpu_intensive_task():

print(f"Process {Process().name} running")

processes = [Process(target=cpu_intensive_task) for _ in range(3)]

for process in processes:

process.start()

for process in processes:

process.join()

Debugging and Profiling Threads

Tools like threading.enumerate() and threading.get_ident() help debug and monitor thread behavior. For profiling, Python’s cProfile module can provide valuable insights.

Mini Project(scheduling in python ): Simulated Multitasking with Threads

In this project, we simulate multitasking where multiple tasks are scheduled using threads. The tasks will represent various I/O-bound operations, like downloading files, while the main program continues running.

Mini Project Code

Note: this project is not run in online compiler, Try this code in IDE or Interpreter(VS code or Pycharm)

import threading

import time

def download_file(file):

print(f"Started downloading {file}")

time.sleep(2) # Simulate file download time

print(f"Finished downloading {file}")

files = ["file1.txt", "file2.txt", "file3.txt"]

# Create threads for downloading files

threads = []

for file in files:

thread = threading.Thread(target=download_file, args=(file,))

threads.append(thread)

thread.start()

# Wait for all threads to finish

for thread in threads:

thread.join()

print("All files downloaded.")Interview Questions for “Amazon

1.How does Python’s Global Interpreter Lock (GIL) affect thread scheduling?

Answer: The GIL ensures only one thread executes Python bytecode at a time, limiting true parallelism in CPU-bound tasks.

2.What type of tasks benefit most from Python’s threading model?

Answer: I/O-bound tasks, such as file downloads, network communication, and database access, benefit most from threading in Python.

3.How does the Python thread scheduler decide which thread to run?

Answer: The Python thread scheduler uses a preemptive scheduling in python model, giving threads CPU time based on OS priorities and thread availability.

4.What is preemptive scheduling in Python threading?

Answer: Preemptive scheduling means that the OS can interrupt a running thread to allocate CPU time to another thread.

5.Can Python threads run in parallel?

Answer: In CPU-bound tasks, threads do not run in parallel due to the GIL. However, in I/O-bound tasks, threads run concurrently.